Medical Knowledge-aware Pretrained Language Models

Incorporating domain knowledge into pretrained language models to improve performance in limited-data scenarios

Fast pretraining of Gene Transformer Models

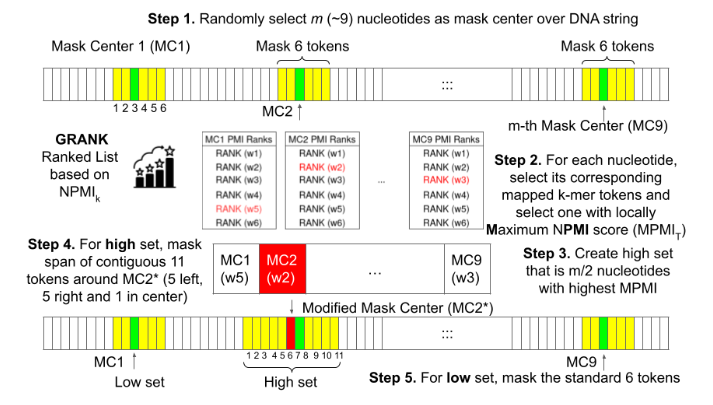

Method overview of GENEMASK, our proposed masking algorithm for masked language model (MLM) training on gene sequences. We randomly select mask centers over the input DNA string and, around each center, mask the local span with the highest Normalized Pointwise Mutual Information (NPMI-k).
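The masking step described in the caption can be illustrated with a minimal sketch. This is not the published GENEMASK implementation. It assumes character-level tokens, a fixed span length `k`, probabilities estimated from the input sequence alone, and a straightforward k-token generalization of NPMI. The names `npmi`, `mask_sequence`, and the `_` mask symbol are hypothetical choices made for illustration.

```python
import math
import random
from collections import Counter


def npmi(span, unigram_p, span_p):
    """NPMI of a span, generalized from the two-token definition (an assumption)."""
    p_span = span_p[span]
    if p_span >= 1.0:
        # Only one distinct span exists; -log(p) would be zero, so return a neutral score.
        return 0.0
    independent = math.prod(unigram_p[c] for c in span)
    return math.log(p_span / independent) / -math.log(p_span)


def mask_sequence(seq, k=3, n_centers=2, mask_char="_", seed=0):
    """Pick random mask centers, then mask the highest-NPMI length-k span covering each."""
    n = len(seq)
    if n < k or n_centers > n:
        raise ValueError("sequence too short for the requested k or n_centers")
    rng = random.Random(seed)

    # Estimate token and span probabilities from the sequence itself (illustrative only).
    unigram_p = {c: cnt / n for c, cnt in Counter(seq).items()}
    spans = [seq[i:i + k] for i in range(n - k + 1)]
    span_p = {s: cnt / len(spans) for s, cnt in Counter(spans).items()}

    out = list(seq)
    for center in rng.sample(range(n), n_centers):
        # Candidate start positions are all length-k windows that contain the center.
        lo = max(0, center - k + 1)
        hi = min(center, n - k)
        best = max(range(lo, hi + 1),
                   key=lambda i: npmi(seq[i:i + k], unigram_p, span_p))
        out[best:best + k] = mask_char * k
    return "".join(out)


if __name__ == "__main__":
    print(mask_sequence("ACGTACGTTTGACA", k=3, n_centers=2, seed=1))
```

In practice, gene transformer models typically tokenize DNA into longer units and estimate span statistics over a whole corpus, so the scoring here should be read only as a toy stand-in for the idea of choosing the most strongly correlated local span around each center.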

Publications

ECAI 2023 Full paper. Awarded 3rd prize in poster presentation at the 4th Indian Symposium on Machine Learning (IndoML 2023) held at IIT Bombay for our ECAI 2023 work on fast pretraining of gene transformer models.

Knowledge-aware NLP models for Medical Forum Question Classification

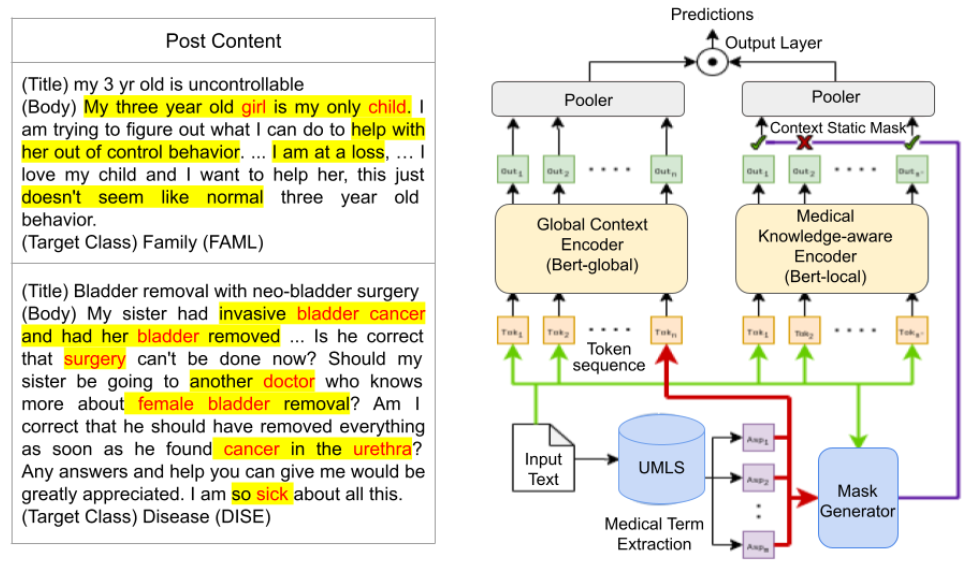

(left) Anecdotal example from the ICHI dataset in which medical concept-bearing aspects (marked in red) are highlighted along with their context. (right) Methodology overview of MedBERT for the medical forum question classification task.

Publications

CIKM 2021 Short paper.